Developing Classifications

Data classification is at the core of Structured Discovery's functionality. It automatically identifies what types of personal data exist in your connected systems, helping you:

- Maintain an accurate Data Inventory for compliance requirements

- Identify sensitive data locations that may require special handling

- Support data minimization by highlighting unnecessary data collection

Transcend uses multiple classification methods to accurately identify data categories, applying them in sequence for the best possible results.

Structured Discovery employs an ensemble of five methods for classifying data, applied in the following order:

- Property Name Matching: Identifies data categories based on column/field names

- Regular Expression Content Matching: Uses pattern matching to identify data in specific formats

- Sombra™ Large Language Model (LLM): Uses AI within your environment to analyze data content

- Transcend-Hosted Metadata-Only LLM: Uses AI to analyze metadata without accessing content

- Random Forest ML Model: (deprecated) A legacy classifier which has been phased out in favor of LLM-based approaches

This sequential approach is designed for both efficiency and accuracy. We begin with simpler, more computationally efficient methods for straightforward classifications, and only progress to more complex methods when needed:

- Optimize Resource Usage: Simple methods like Property Name Matching require minimal computational resources and can quickly identify common data types.

- Progressive Complexity: Each subsequent method employs more sophisticated analysis techniques, using increasingly detailed information about the data.

- Fall-Through Design: If a datapoint isn't classified with high confidence by one method, it "falls through" to the next, more nuanced method.

- Balance Speed and Accuracy: This approach ensures we use the least intensive method capable of making an accurate classification.

This method examines the name of each field (column) and matches it against known data categories. For example:

- A column named

emailis classified as containingEMAIL - A field called

birth_datematches theDATE_OF_BIRTHcategory - Common variations and format differences are considered

- System-specific prefixes and suffixes (like Salesforce's

__c) are accounted for

Property Name Matching provides high specificity (few false positives) but lower sensitivity (may miss some matches). It typically achieves about 72% accuracy but requires no access to the actual data content.

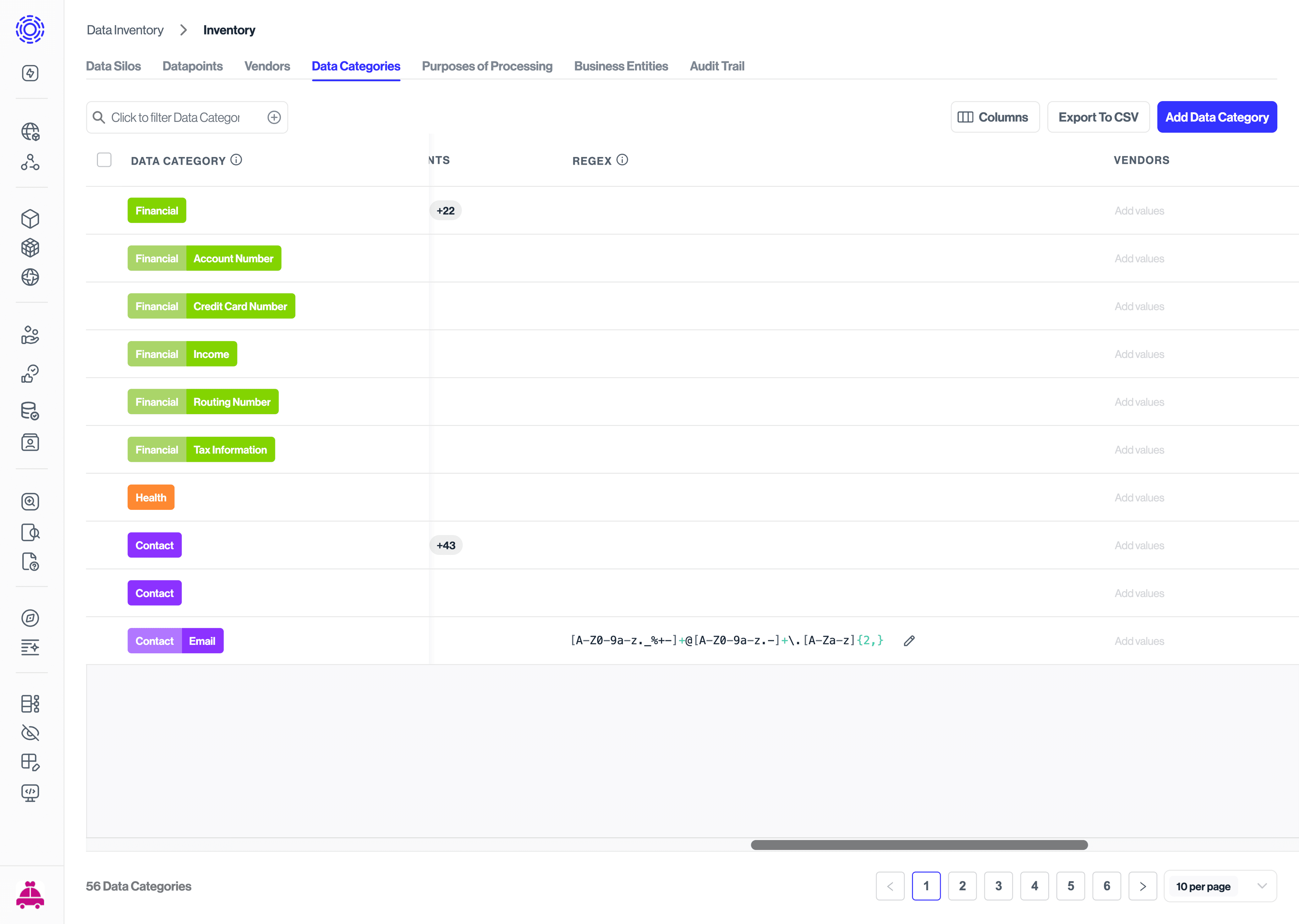

You can define custom pattern-matching rules to identify specific data formats. To manage these patterns:

- Navigate to the Data Inventory > Data Categories page

- Select a data category

- Add or edit regular expressions for that category

Best practice: Create specific patterns that avoid false positives. For example:

- Good:

/[A-Z0-9a-z._%+-]+@[A-Z0-9a-z.-]+\.[A-Za-z]{2,}/for email addresses - Problematic:

\d+-\d+-\d+-\d+for phone numbers (too generic, would match many non-phone numbers)

These custom regular expressions work for both Structured and Unstructured Discovery. Columns matching your patterns will appear with "Regex Matching" as the classification method.

For data not classified by the previous methods, our advanced LLM classifier analyzes both the metadata and content samples. This model:

- Runs within your environment for maximum data security

- Analyzes up to 200 characters of sample data

- Considers column names, table context, and data patterns

- Achieves up to 82% classification accuracy

This approach requires:

- Minimum Classifier version 3.0.6 and Sombra version 7.226.3

- GPU-enabled infrastructure (recommended: AWS

g2.5xlargeinstance)

See our Hosted LLM Classifier documentation for deployment details.

For organizations not ready to host their own LLM, we offer a cloud-based classification option that:

- Analyzes only metadata (table and column names, descriptions)

- Does not process actual data content

- Achieves up to 70% classification accuracy

- Requires no additional infrastructure

This becomes the fallback if the self-hosted LLM is unavailable. While effective, it may occasionally misclassify fields where the name is ambiguous without seeing sample data.

Our legacy classification approach used a statistical machine learning technique called random forests. We're phasing out this method, but you may still see some predictions from it in your results.

Each classification method uses different information sources, as summarized below:

| Method | Datapoint Name | Datapoint Description | Category Description | Data Content Samples |

|---|---|---|---|---|

| Property Name Matching | ✓ | |||

| Regular Expression Content Matching | ✓ | |||

| Self-Hosted Large Language Model | ✓ | ✓ | ✓ | ✓ |

| Transcend-Hosted Metadata-Only LLM | ✓ | ✓ | ✓ |

As our classification methods improve, they're automatically applied to unconfirmed datapoints (those without manually confirmed categories). New classification guesses are added to existing unconfirmed guesses.

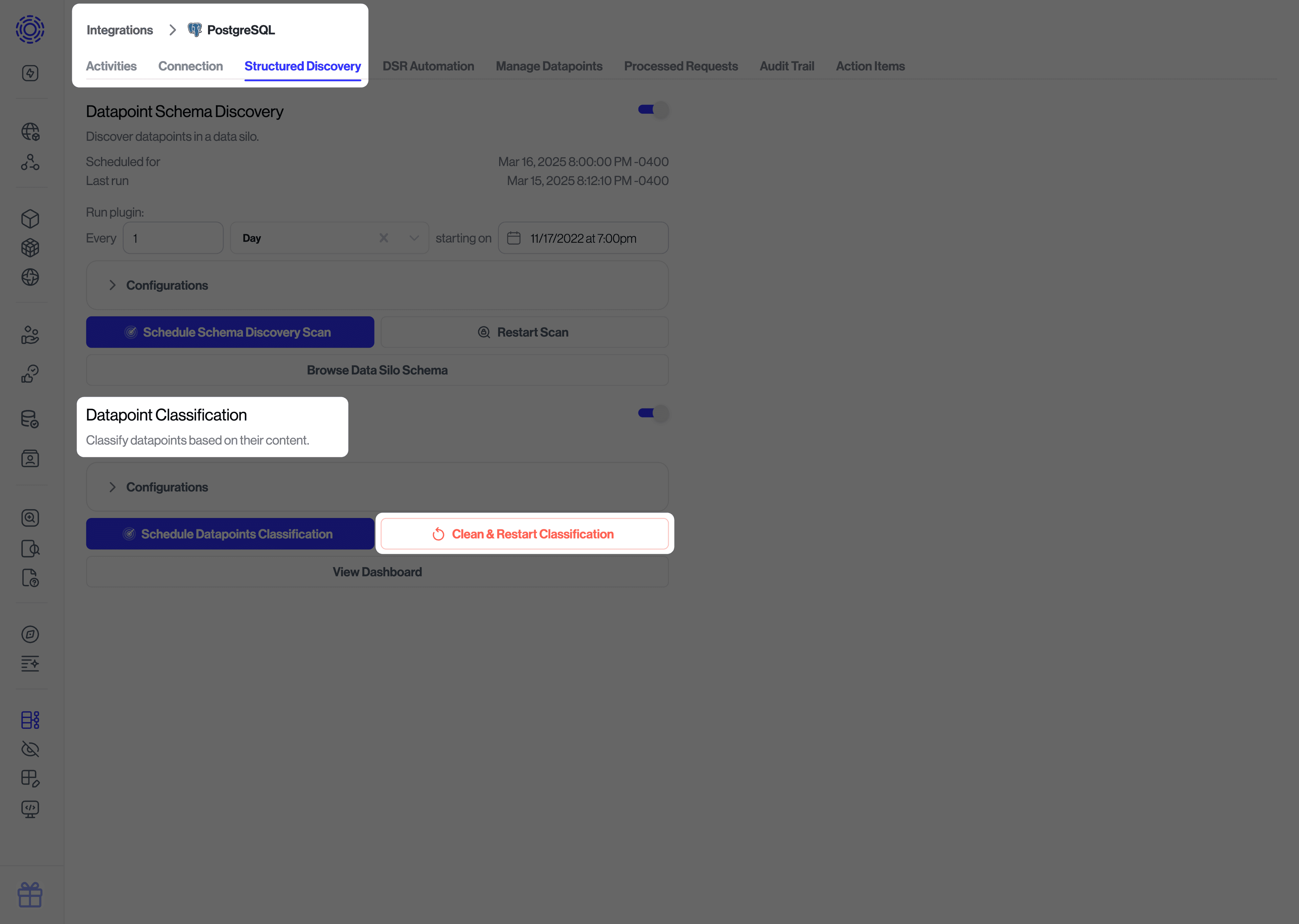

If you need to refresh classifications:

- For an entire data system: Go to the integration page, select Structured Discovery and click Clean & Restart Classification

- For an individual column: Hover over the column name and click the Resample and reclassify column icon